Automated Data Processing: How LLMs with Tools Simplify Data Wrangling

Check out our open source project FFMPerative and try the Remyx Engine today!

We built the Remyx Model Engine to make it straightforward for anybody to create computer vision models regardless of access to data & compute resources or machine learning expertise.

But model training is only a small part of the machine learning (ML) lifecycle, and a discipline called MLOps has emerged to systematize the development and maintenance of ML applications.

We needed to make model deployment as easy as training with the Remyx Engine, including the convenience of working through natural language. And so we were excited to find HuggingFace’s announcement about Transformer Agents and Tools.

Fantastic libraries like LangChain and Guidance are popular choices for taming the output of LLMs, but we found it easy to bundle complex workflows into Transformer Tools. We used this framework to simplify the overarching challenge of extracting Tool inputs from free text with LLMs. Ultimately, our Agent can compose Tools to achieve still more complex goals.

Our first Agent is a simple Tool that applies a custom classifier to a directory of images. Check out the HuggingFace Space for our RemyxClassifierTool or our Google Colab notebook.

Our Colab notebook showed that anybody could apply computer vision, and we saw how expert LLMs equipped with Tools can automate all kinds of data processing workflows. But first, let’s consider a toy problem with similar characteristics that everyone faces: video processing workflows.

Commanding Multimedia

Video processing generally entails a sequence of modular transformations of multimedia as we stream from a source to a sink, which might include a container file or socket.

FFmpeg is the most powerful command-line tool for working with multimedia, but most people need help remembering all the recipes to use it. And so, we created dozens of Tools wrapping commands using ffmpeg-python, giving rise to FFMPerative: Chat for Video Processing.

Using FFMPerative, you express your intent in natural language, and the LLM matches it to the correct video processing recipe built with ffmpeg-python.

For example, we often have a directory of images and want to turn it into an mp4 or gif. We can accomplish this with FFMPerative like so:

ffmp do -p "make a video from '/path/to/image_dir' called '/path/to/my_video.gif'"
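
As a rough illustration of the kind of recipe a Tool like this might run for the prompt above, the sketch below builds a plain FFmpeg argument list that turns a directory of images into a video. It is not FFMPerative's actual implementation: the function name `images_to_video_args`, the PNG-only glob, and the default frame rate are assumptions made for this example, and it uses only the Python standard library rather than ffmpeg-python.

```python
def images_to_video_args(image_dir: str, output_path: str, framerate: int = 24) -> list:
    """Build an ffmpeg argument list that turns a directory of PNGs into a video.

    Hypothetical helper: the name, the PNG-only glob, and the default
    frame rate are assumptions, not part of FFMPerative.
    """
    # Match every PNG in the directory; ffmpeg reads them in sorted order.
    pattern = f"{image_dir.rstrip('/')}/*.png"
    return [
        "ffmpeg",
        "-y",                          # overwrite the output file if it exists
        "-framerate", str(framerate),  # input frames per second
        "-pattern_type", "glob",       # interpret the input as a glob pattern
        "-i", pattern,
        output_path,                   # container inferred from the extension
    ]
```

The list can be passed to `subprocess.run(args, check=True)` on a machine with FFmpeg installed. Note that glob patterns are generally not supported by FFmpeg builds on Windows, so a numbered pattern such as `frame_%04d.png` may be needed there.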

Since people often watch videos with the audio muted, we often want to merge, or mux, the text from an .srt file with the video in an .mp4 for closed captioning. Tools like whisperX extend OpenAI’s whisper with additional audio alignment steps to achieve more precise word-level timestamps. This extended pipeline generates .srt files with shorter, more readable captions than the original whisper. We made a Tool to wrap the FFmpeg commands necessary to merge these files for closed captioning:

ffmp do -p "merge subtitles '/path/to/captions.srt' with video '/path/to/my_video.mp4' calling it '/path/to/my_video_captioned.mp4'"

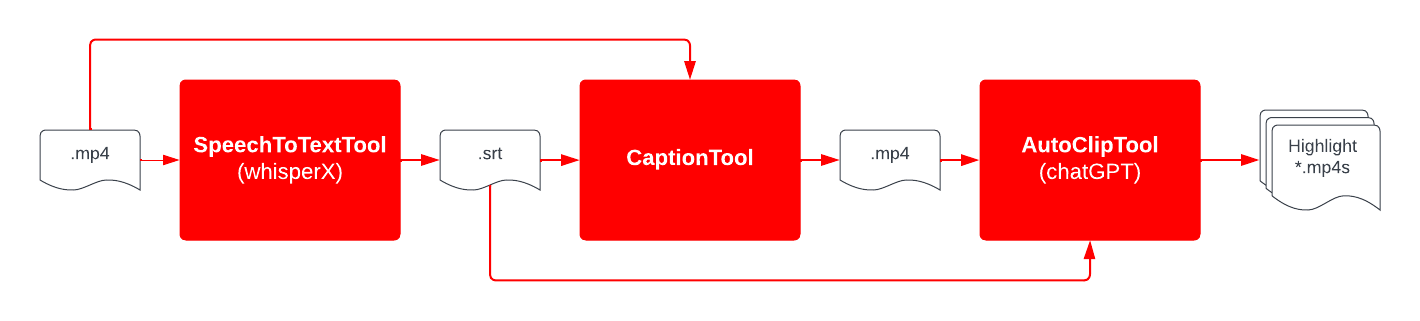
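
For readers curious what merging subtitles looks like at the FFmpeg level, here is a minimal sketch covering two common approaches: muxing the .srt as a soft subtitle track, or burning the captions into the frames. Which approach FFMPerative's Tool takes is not stated here, so treat this as an assumption-laden example; `merge_subtitles_args` and its `burn_in` flag are hypothetical names.

```python
def merge_subtitles_args(video_path: str, srt_path: str, output_path: str,
                         burn_in: bool = False) -> list:
    """Build an ffmpeg argument list that adds .srt captions to a video.

    Hypothetical helper, not FFMPerative's API.
    """
    if burn_in:
        # Render the captions into the video frames (requires an ffmpeg
        # build with libass); the video stream must be re-encoded.
        return [
            "ffmpeg", "-y",
            "-i", video_path,
            "-vf", f"subtitles={srt_path}",
            "-c:a", "copy",
            output_path,
        ]
    # Mux the captions as a separate, toggleable subtitle track.
    # mov_text is the subtitle codec used for MP4 containers.
    return [
        "ffmpeg", "-y",
        "-i", video_path,
        "-i", srt_path,
        "-c:v", "copy",
        "-c:a", "copy",
        "-c:s", "mov_text",
        output_path,
    ]
```

Soft subtitles avoid re-encoding but depend on player support, while burned-in captions always display, which tends to matter for social media clips. Paths containing characters such as colons or quotes would need escaping before being placed in the `subtitles=` filter string.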

A popular marketing strategy works by trimming long-form videos into dozens of short & shareable clips to drive brand engagement on social media. We are experimenting with automating this service using a Tool that passes the timestamped text from .srt files to powerful LLMs like GPT-4. After tasking ChatGPT with isolating the most exciting dialogue segments, the Tool cuts the selected clips using FFmpeg.
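
The non-LLM half of that idea can be sketched in a few lines: parse the .srt into timestamped captions, then build an FFmpeg command that cuts a clip between two timestamps. The LLM selection step is deliberately left out, and the names `Caption`, `parse_srt`, and `clip_args` are hypothetical, chosen for this example rather than taken from FFMPerative.

```python
import re
from dataclasses import dataclass

# SRT timestamps look like 00:01:02,250 (hours:minutes:seconds,milliseconds).
TIMESTAMP = re.compile(r"(\d+):(\d{2}):(\d{2})[,.](\d{3})")


@dataclass
class Caption:
    start: float  # seconds
    end: float    # seconds
    text: str


def to_seconds(stamp: str) -> float:
    """Convert an SRT timestamp string to seconds."""
    match = TIMESTAMP.fullmatch(stamp.strip())
    if match is None:
        raise ValueError(f"not an SRT timestamp: {stamp!r}")
    hours, minutes, seconds, millis = (int(g) for g in match.groups())
    return hours * 3600 + minutes * 60 + seconds + millis / 1000


def parse_srt(text: str) -> list:
    """Parse SRT text into a list of Caption objects."""
    captions = []
    # Caption blocks are separated by blank lines.
    for block in re.split(r"\n\s*\n", text.replace("\r\n", "\n").strip()):
        lines = block.splitlines()
        timing = next((i for i, line in enumerate(lines) if "-->" in line), None)
        if timing is None:
            continue  # skip blocks without a timing line
        start, end = lines[timing].split("-->")
        captions.append(Caption(
            start=to_seconds(start),
            end=to_seconds(end.split()[0]),  # ignore optional position hints
            text=" ".join(line.strip() for line in lines[timing + 1:]),
        ))
    return captions


def clip_args(video_path: str, start: float, end: float, output_path: str) -> list:
    """Build an ffmpeg argument list that cuts [start, end] without re-encoding."""
    return [
        "ffmpeg", "-y",
        "-i", video_path,
        "-ss", f"{start:.3f}",
        "-to", f"{end:.3f}",
        "-c", "copy",  # stream copy: fast, but cuts snap to nearby keyframes
        output_path,
    ]
```

In a full pipeline, the parsed captions would be formatted into a prompt, the LLM would return the start and end times of the segments it judges most engaging, and each pair would be passed to `clip_args`. Dropping `-c copy` gives frame-accurate cuts at the cost of re-encoding.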

The pipeline that extracts text, applies captioning, and selects the most engaging highlight clips can be expressed using three Tools with a command like:

ffmp do -p "Use STT to caption 'remyx_demo.mp4' naming the srt 'remyx_caps.srt'. Step 2, add the subtitles and video naming the result 'remyx_subs.mp4'. Lastly, edit clips from that video based on the .srt"

In this way, generative AI can help lower content creation costs with a video editing copilot to assist in producing human-made content.

The Remyx Agent

By analogy, we envision experiments taking place at the pace of conversation using LLMs with Tools specialized in automating the composition of general data processing workflows. Recent research from Microsoft and Meta shows that LLMs perform best in task automation or code completion after additional specialization using key libraries and APIs.

With efficient fine-tuning techniques, we can bolster model expertise with these workflows and associated tools to bias the LLM toward better task performance. Just as we can expect a boost in LLM performance after training with samples that include chain-of-thought reasoning, we can build more abstract workflows with expert recipes that compose tools in practical and valuable ways.

We see the Remyx Agent as the workhorse of this future! By building up our suite of Tools for transforming data, users can experiment and adapt their MLOps through chat, supported by a copilot with data-processing expertise!